Introduction

I need to know how many subwikis XWiki can support without freezing or crashing. I prepared a kind of stress test (note that I'm not familiar with this kind of thing) to create subwikis massively in an XWiki instance. By massively, I mean 1000, which seems to be sufficient (as you will see in the results).

My XWiki instance (5.3-milestone-2) is a local instance on port 8080, running on Jetty with HSQLDB.

Since my first method did not work very well, I tried a second way to run this stress test, although it may introduce a measurement bias.

First method - Velocity script called by a curl request

The Velocity script uses the wiki service, which is new in version 5.3 of XWiki. With this service, you can do a lot of interesting things:

- exists(String) - Test the existence of a subwiki

- getById(String) - Get the wiki descriptor

- createWiki(String, String, String, boolean) - Create a new wiki

This is the Velocity code I used, stored in WikiStressTestCode/FromRequest. The page takes an HTTP request as input, with two parameters:

* wikiname - the name of the created wiki

* wikinumber - the number of the created wiki (useful for a stress test)

{{velocity}}

#set($wikiName = $!request.getParameter('wikiname'))

#set($wikiNumber = $!request.getParameter('wikinumber'))

#set($wikiId = "$!wikiName$!wikiNumber")

#if($wikiId.isEmpty())

#set($wikiName = 'Null')

#set($wikiNumber = 0)

#set($wikiId = 'null')

#end

#if($services.wiki.exists($wikiId))

#set($wiki = $services.wiki.getById($wikiId))

#else

#set($wiki = $services.wiki.createWiki($wikiId, $wikiId, 'xwiki:XWiki.Admin', true))

#end

#if($wiki)

#set($wikiPrettyName = "Wiki $wikiName #$wikiNumber ")

#set($discard = $wiki.setPrettyName($wikiPrettyName))

#set($wikiDescription = "Wiki $wikiName #$wikiNumber created for the stress test")

#set($discard = $wiki.setDescription($wikiDescription))

#set($discard = $services.wiki.saveDescriptor($wiki))

#else

#set($discard = $response.sendError(404, 'Error in creating the wiki'))

#end

{{/velocity}}

Once this page has been created, you can call it with a curl request as follows:

#!/bin/bash

NUMBER=1

NAME=wiki

if [ $# -ge 1 ]

then

NUMBER=$1

fi

if [ $# -ge 2 ]

then

NAME=$2

fi

curl \

--silent \

--show-error \

--user Admin:admin \

-F "wikiname=$NAME" \

-F "wikinumber=$NUMBER" \

-o "data/$NAME.html" \

http://localhost:8080/xwiki/bin/view/WikiStressTestCode/FromRequest

This script sends a single request and takes the number and the name as optional parameters. It writes the response to data/$NAME.html, so the data directory must exist before the script is run.

I call the create-wiki.sh script in a loop from a second script, stress-test.sh. This second script measures the time of each request in order to observe how the time needed to create a new subwiki evolves.

#!/bin/bash

TEST_NUMBER=3

NAME=test

LOG_FILE=stress-test-request.log

if [ -f $LOG_FILE ]

then

rm $LOG_FILE

fi

if [ $# -ge 1 ]

then

TEST_NUMBER=$1

fi

for num in `seq 1 $TEST_NUMBER`

do

echo -n "Creating wiki $NAME #$num..."

/usr/bin/time --format=%e --output=$LOG_FILE --append ./create-wiki.sh $num $NAME

TIME=`tail -n 1 $LOG_FILE`

echo " ("$TIME"s)"

done

And now, here is the result of this first method.

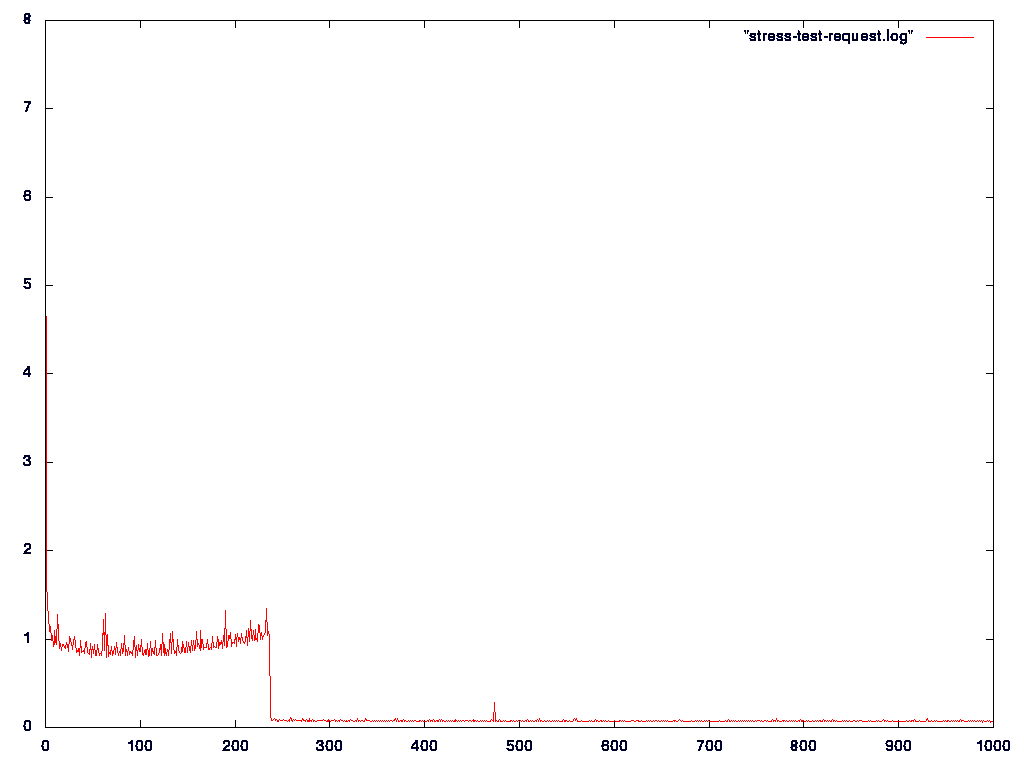

As you can see, after around 250 creations, XWiki seems to stop responding. I checked in XWiki that the first 250 subwikis were created, but not the others. I suspect that the indexing done by either Lucene or Solr is overloaded and freezes the whole wiki.
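To pin down where the slowdown starts without reading the plot, the log can be scanned for the first request that took longer than a chosen limit. The following is a minimal sketch, not part of the original scripts: the function name first_slow_request and the threshold value are hypothetical, and it assumes the log holds one elapsed time in seconds per line, as written by --format=%e. Any other line is ignored.

```shell
# Print the 1-based index of the first request whose elapsed time exceeds
# a threshold (in seconds). Prints nothing if no request was that slow.
# Assumption: one elapsed time per line; non-numeric lines are skipped
# and do not count as requests.
first_slow_request() {
    local log_file=$1 threshold=$2
    awk -v limit="$threshold" '
        /^[0-9]+(\.[0-9]+)?$/ {
            n++
            if ($1 + 0 > limit + 0) { print n; exit }
        }
    ' "$log_file"
}
```

For example, `first_slow_request stress-test-request.log 5` prints the number of the first creation that took more than 5 seconds, or nothing if none did.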

Second method - Groovy script

The Groovy script in WikiStressTestCode.InsideGroovy does essentially the same thing as the Velocity script of the first method. However, it does not respond to a request but runs its own loop to create the wikis. The problem with this method is that as long as the page is processing the iterations, the page keeps loading and there is no way to know the state of the loop (for example, “How many iterations have been done?”).

This tricky part is handled by writing the progress to a second page called WikiStressTestCode.InsideGroovyResults.

The second problem is measuring the execution time. This is done in Groovy with System.currentTimeMillis().

{{groovy}}

String wikiName = "test";

int testNumber = 1000;

resultsPage = xwiki.getDocument("WikiStressTestCode", "InsideGroovyResults");

resultsPage.setContent("");

resultsPage.save();

int wikiNumber = 1;

while(wikiNumber <= testNumber) {

begin = System.currentTimeMillis();

String wikiId = String.format("%s%s", wikiName, wikiNumber);

if(services.wiki.exists(wikiId)) {

wiki = services.wiki.getById(wikiId);

} else {

wiki = services.wiki.createWiki(wikiId, wikiId, "xwiki:XWiki.Admin", true);

}

if(wiki) {

String wikiPrettyName = String.format("Wiki %s #%s", wikiName, wikiNumber);

wiki.setPrettyName(wikiPrettyName);

String wikiDescription = String.format("Wiki %s #%s created for the stress test", wikiName, wikiNumber);

wiki.setDescription(wikiDescription);

services.wiki.saveDescriptor(wiki);

}

wikiNumber = wikiNumber + 1;

end = System.currentTimeMillis();

String time = String.format("%d", end-begin);

resultsPageContent = resultsPage.getContent();

resultsPage.setContent(String.format("%s\n%s", resultsPageContent, time));

resultsPage.save();

}

{{/groovy}}

To create an image, I can now load the page WikiStressTestCode.InsideGroovyResults to get all the measured times.
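Before plotting, it can help to turn the raw page content into two columns (wiki number and time). Here is a minimal sketch, assuming the content of the results page has been saved to a local file with one time in milliseconds per line. The file name results.txt and the function name number_times are hypothetical.

```shell
# Read times from standard input (one integer per line) and print
# "index time" pairs, one pair per line. Blank lines, such as the empty
# first line of the results page, are skipped.
number_times() {
    awk '$1 ~ /^[0-9]+$/ { n++; print n, $1 }'
}
```

Running `number_times < results.txt > results.dat` produces a file that most plotting tools can read as x/y data.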

And now, here is the result of this second method.

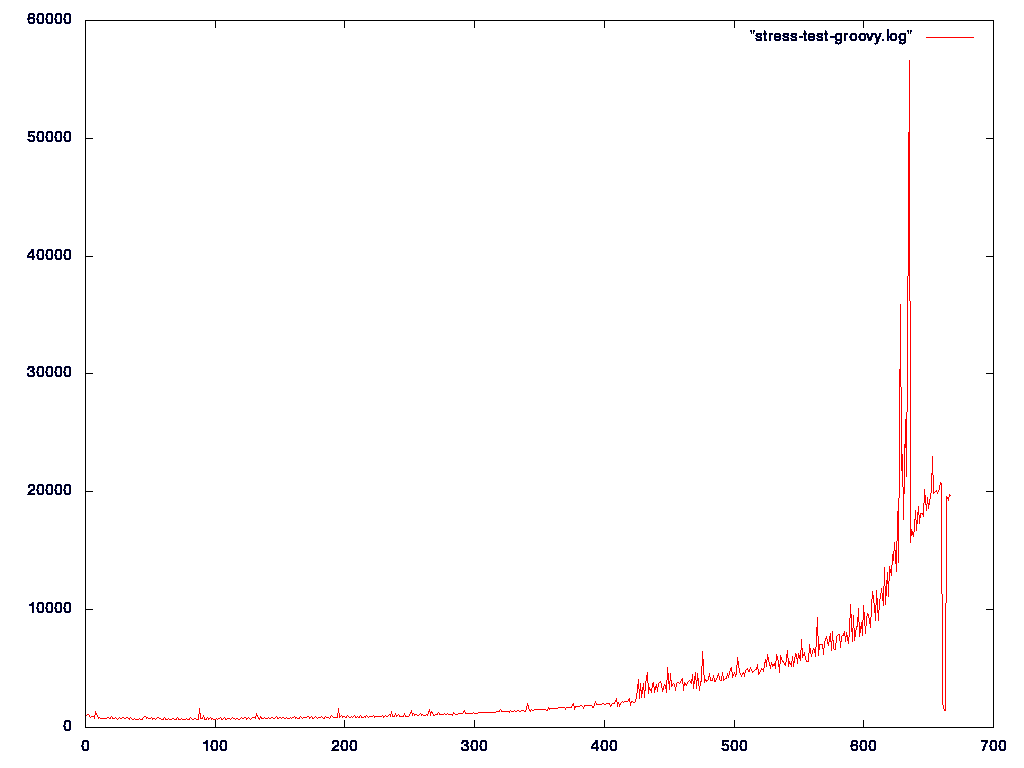

As you can see, I was not able to create my 1000 wikis: the instance crashed after almost 700. First of all, the creation time seems to grow exponentially, which is bad news! However, this conclusion should be qualified, given that all subwikis are created from the same page: it is possible that the execution context grows (in memory), which affects subwiki creation. To put it simply, the exponential growth may be a consequence of my stress test and not of XWiki (or maybe both!).
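One way to check the exponential impression is to fit a straight line to the logarithm of the times: if the growth is exponential, ln(time) is close to linear in the wiki number. The sketch below does this least-squares fit with awk. The function name fit_exponential is hypothetical, and it assumes one positive time per line on standard input.

```shell
# Fit ln(time) = a + b * index by least squares, where index is the
# 1-based position of each sample. Prints the growth rate b and the R^2
# of the fit. Lines that are not positive numbers are skipped.
fit_exponential() {
    awk '
        $1 + 0 > 0 {
            n++
            x = n; y = log($1)
            sx += x; sy += y; sxx += x * x; syy += y * y; sxy += x * y
        }
        END {
            if (n < 2) { print "need at least two samples"; exit 1 }
            dx = n * sxx - sx * sx
            dy = n * syy - sy * sy
            c  = n * sxy - sx * sy
            b  = c / dx
            # Flat data: R^2 is undefined, report 0 instead.
            r2 = (dy > 1e-12) ? (c * c) / (dx * dy) : 0
            printf "rate=%.4f r2=%.4f\n", b, r2
        }
    '
}
```

An r2 close to 1 is consistent with exponential growth, and exp(rate) is then the estimated multiplication factor per created wiki. The fit says nothing about whether the growth comes from XWiki or from the test page itself.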

Conclusion

You can find the XWiki code and the scripts that have been used here:

On a server, you will probably be able to create around 1000 subwikis, but the basic stress tests that I ran show that improvements should be made to XWiki before going further. Another method that I have not tested, and which would probably be the best for stress testing, is to use JMeter.